Composable IoT

Composable IoT will be your guide in the wonderful world of the Internet of Things (IoT) and how to create applications for it. The term composable is used because we will create applications from many different services. Some are provided as SaaS (software as a service) or PaaS (platform as a service) where you write some code yourself.

There are no generally accepted rules that govern how you create IoT applications although I do think the application should be created from smaller building blocks, hence you often hear the term microservices. In this book, I use the term microservices loosely, mainly to indicate that the application is comprised of several smaller parts. I do not necessarily follow specific architectural patterns, for instance as described at http://microservices.io. I also do not want to imply that this approach is necessarily the one to follow in all cases. In this book, microservices can be containerized pieces of code written with Node.js or Go but they can also be Azure Functions or easy to use cloud services such as Event Hubs or IoT Hubs.

Data Pipelines

When writing applications that work with IoT data, it helps to take a step back and think about the flow of your data. This flow of data is called a data pipeline and is just a series of steps as illustrated below. In this book, we are mainly concerned with raw device data which is streaming data. In other words, devices generate a stream of data (or events) and we will act on that data as it comes in. A device can send data at specific intervals or when an event of interest takes place such as motion detected by a motion sensor.

In this book, we are mainly concerned with raw device data which is streaming data. In other words, devices generate a stream of data (or events) and we will act on that data as it comes in. A device can send data at specific intervals or when an event of interest takes place such as motion detected by a motion sensor.

With potentially hundreds or thousands of devices sending data, a scalable ingestion mechanism is required. In IoT scenarios, the ingestion mechanism usually provides a multiconsumer queue for downstream applications. In contrast with more traditional queues, the messages on the queue are not deleted when read by a consumer. The messages are kept in the queue for an amount of time that you choose. It is the application's responsibility to keep track of the messages that have already been read.

Downstream applications read from the multiconsumer queue at their own pace and process the data. Processing the data can be as simple as storing the data in a file store or database but can also entail checking for anomalies and sending alerts.

In the last step, processed data is made available to the user by means of an application or by integrating the data into another system.

Content Overview

This book will be light on theory and heavy on examples. Let's take a look at what we will build, shall we?

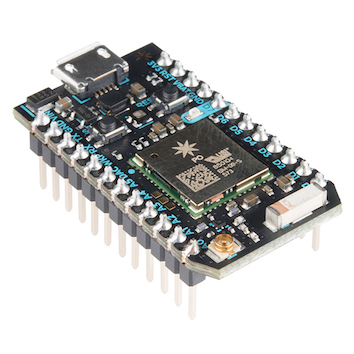

Before we get ahead of ourselves and start writing code to do something meaningful with streaming data generated by a device, we need to cover some basics at the device level. There's a myriad of devices out there that anyone can start with. For this book, I have selected the Particle Photon to get started easily.

The Particle Photon, as shown above, is very easy to work with thanks to the Particle Cloud and the associated development tools. You will program the device straight from the browser and update the device code over the air (OTA). The device code you will write is by no means of production quality. You can fill a separate book with information about writing stable device code, how to interface with quirky sensors, how to secure data on the device and so on. This book will not teach you that. For those that do not want to work with devices, some chapters will use a device simulator that generates random data for a set of dummy devices.

There will be cases where the architectural choices made by Particle will get in the way of what we try to achieve. That is especially the case when we will try to talk to cloud services securely, bypassing the Particle Cloud. In those cases I will use other devices such as the MKR1000 from Arduino and the Adafruit WICED WiFi Feather. These devices make it relatively easy to connect securely to cloud services because of their hardware and the associated libraries. More details about these devices can be found in Chapter 2.

When you have a device or simulator that generates data, where do you send that data? In this book, we will use the ingestion components of the Azure IoT Suite. You will see how to create an IoT Hub from the command line and then use the Particle Cloud to forward data to it with the help of a Particle Cloud integration. We will also see how to forward data directly to IoT Hub without the Particle Cloud integration and we will write code on the device that sends data to IoT Hub using HTTP and MQTT.

To process the data, you will use several other Azure components such as Stream Analytics, Functions and custom microservices. Stream Analytics usually processes messages one at a time and forwards them to another system such as a database, a data lake or reporting system such as Power BI. Alternatively, you can apply windowing to the messaging stream and for instance average values over a specific time window. If devices send data every minute, but you are only interested in averaged values over 10 minutes, you can do that directly in Stream Analytics. Functions allow you to write code without having to think about servers and other infrastructure components such as containers. The only thing that matters is your code and Azure Functions takes care of the rest. Functions can be connected to a stream of data coming from IoT devices and then take custom actions on the data. If you want to send alerts when certain values are over or under a threshold, Azure Functions is one way of doing just that!

When I say custom microservices, where and how do we run them? Services will be written with Node.js or Go and packaged in Docker containers. These containers have to run somewhere and we will use both Rancher and its own Cattle orchestrator and Azure Container Service with Kubernetes. Rancher and Cattle will require you to setup Linux hosts (we'll use Ubuntu) with Docker where Azure Container Service will take care of that for you. In the first chapter, I will show you an even simpler way of running a container with Azure App Service.

Naturally, all code will be in a version control system and this book uses GitHub. Containers will be built and deployed automatically using some CI/CD tool and this book uses Shippable and Visual Studio Team Services.

In the next chapter, we will start with a simple example application that touches on most of the components that were described above. In subsequent chapters, we will delve a bit deeper.

Disclaimer

This book is written as a fun side project. Although I have done my best to avoid errors in both the text and the code, it is entirely possible, actually highly likely, that they are there. If you spot those errors, do not hesitate to leave a comment.